Have you ever run into a situation where your Drupal 8 development or testing servers were accidentally indexed by a search engine? This quick tutorial is meant to help ensure that never happens again! Today we will talk about two different options for blocking your site from search engine crawlers:

- HTTP Basic Authentication - Setting up HTTP Basic Authentication makes the browser prompt for a username and password before anyone can access the website.

- Robots.txt + Robots Meta - Use web standards to tell search engines not to crawl or index your website.

Option 1: HTTP Basic Authentication

HTTP Basic Authentication is a standard practice to protect your development and testing sites from search engines. There are a few ways to implement this in Drupal 8.

Contributed Module: Shield

The contributed Shield module lets you enable and configure HTTP Basic Authentication on your website from within the admin UI. This approach is easy to configure and works out of the box with Drupal 8. All you have to do is download, enable and configure the module.

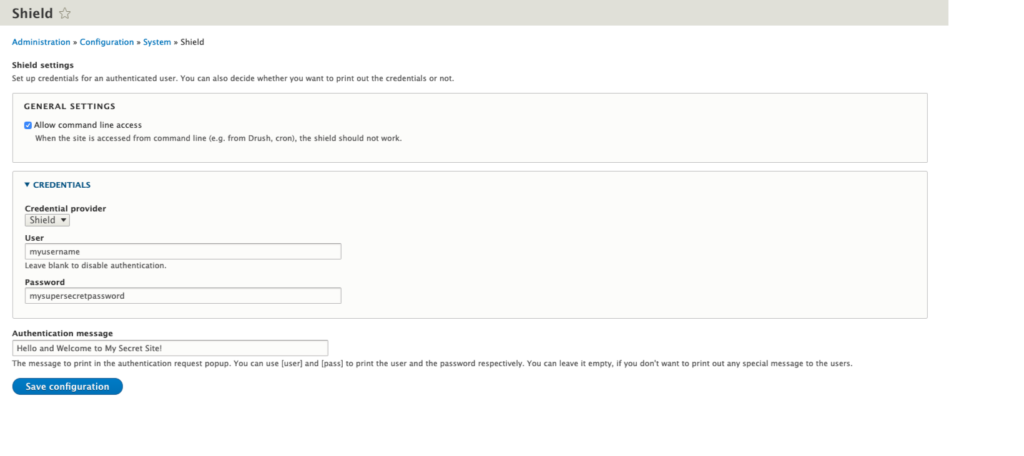

Here is a quick look at the configuration page for the Shield module:

Shield Module Configuration Screen Example

A quick rundown of the settings here:

- Allow Command Line Access: This is important if you plan on using Drush or Drupal Console on the site. Enabling this setting will allow command line functions to continue to run against your website.

- Credential Provider: Unless you have another credential module configured, Shield will be the only option here.

- User: This is the username configured for the HTTP Basic Authentication popup.

- Password: This is the password configured for the HTTP Basic Authentication popup.

- Authentication Message: This is a message that appears in the HTTP Basic Authentication popup.

Custom Code: Acquia Cloud Example

Some organizations may prefer to have more control over the setup and configuration of HTTP Basic Authentication. This can also be set up and enabled through your Drupal 8 settings.php file. Below is an example of an implementation tailored for Acquia Cloud hosting:
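The original code sample is not reproduced here, so what follows is a minimal sketch of the idea rather than a definitive implementation. It assumes Acquia Cloud exposes the environment name in `$_ENV['AH_SITE_ENVIRONMENT']`, that `dev` and `test` are the environments you want to protect, and that PHP populates `PHP_AUTH_USER` and `PHP_AUTH_PW` for you. The username and password are placeholders.

```php
// Append to settings.php (which already opens with "<?php").

// Assumption: Acquia Cloud sets AH_SITE_ENVIRONMENT to e.g. 'dev', 'test', 'prod'.
$ah_env = isset($_ENV['AH_SITE_ENVIRONMENT']) ? $_ENV['AH_SITE_ENVIRONMENT'] : NULL;

// Protect only dev and test, and skip command line requests so that Drush
// and Drupal Console keep working.
if (in_array($ah_env, ['dev', 'test'], TRUE) && PHP_SAPI !== 'cli') {
  // Placeholder credentials (hypothetical); replace with your own.
  $expected_user = 'example_user';
  $expected_pass = 'example_password';

  $given_user = isset($_SERVER['PHP_AUTH_USER']) ? $_SERVER['PHP_AUTH_USER'] : '';
  $given_pass = isset($_SERVER['PHP_AUTH_PW']) ? $_SERVER['PHP_AUTH_PW'] : '';

  // hash_equals() compares the strings in constant time.
  $authorized = hash_equals($expected_user, $given_user)
    && hash_equals($expected_pass, $given_pass);

  if (!$authorized) {
    // Ask the browser to show the HTTP Basic Authentication popup.
    header('WWW-Authenticate: Basic realm="Restricted"');
    header('HTTP/1.1 401 Unauthorized');
    exit('Access denied.');
  }
}
```

Keeping the check in settings.php means it runs before Drupal bootstraps, so it can be tied to the hosting environment without installing a module.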

Why is HTTP Basic Authentication not an ideal solution?

HTTP Basic Authentication works great for keeping both robots and the average user off of your development site. It does, however, have a few downsides:

- Enabling HTTP Basic Authentication disables caching to ensure that all pages being served up can only be viewed by authorized individuals. This means you will not be able to test that your caching layers are functioning properly on environments protected by HTTP Basic Authentication.

- HTTP Basic Authentication provides a false sense of security. The credentials are only Base64-encoded, not encrypted, so unless the site is served over HTTPS they can be read in transit. It should not be treated as a way to "protect" your website.

Option 2: Robots.txt + Robots Metatag

The second option that we are going to talk about is (properly) utilizing your robots.txt file along with the robots metatag. Many people incorrectly assume that configuring their robots.txt file alone is enough to stop their pages from being indexed. Newsflash, it is not!

Why isn't a properly configured robots.txt file enough?

Most search engine crawlers, like Google and Bing, follow the instructions of the robots.txt file and won’t crawl the content of the page. Great, right? Well yes, BUT that doesn't mean that your page won't be indexed. Have you ever seen limited listings in your SERPs? These are pages whose content robots.txt blocks from being crawled, but which the search engine still registers in its index, typically because other pages link to them.

How do I protect my pages from being indexed?

The answer is simple: tell the search engine crawlers that your page should not be indexed or followed. This can be done by adding a meta tag in the `<head>` of your pages. The metatag should look something like this:

<meta name="robots" content="nofollow,noindex">

How can I protect all of my pages from robots in Drupal 8?

As far as we can tell, there is no stable module that provides this functionality out of the box. It's possible we will create one soon, but in the meantime all it takes is a quick implementation of hook_preprocess_html() to add this to all of your pages. Here is an example of a hook that would add the robots metatag to your website on the Acquia Cloud development environment.
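A minimal sketch of such a hook, not the original sample, could look like the following. It assumes a custom module named `mymodule` (a hypothetical name), that Acquia Cloud exposes the environment name in `$_ENV['AH_SITE_ENVIRONMENT']`, and that the development environment is called `dev`.

```php
<?php

/**
 * Implements hook_preprocess_html().
 *
 * Adds a robots metatag to every page on the Acquia Cloud dev environment.
 * "mymodule" is a hypothetical module name; use your own module or theme.
 */
function mymodule_preprocess_html(array &$variables) {
  // Assumption: Acquia Cloud sets AH_SITE_ENVIRONMENT to 'dev' on development.
  $ah_env = isset($_ENV['AH_SITE_ENVIRONMENT']) ? $_ENV['AH_SITE_ENVIRONMENT'] : NULL;
  if ($ah_env !== 'dev') {
    return;
  }

  // Render array for <meta name="robots" content="noindex,nofollow">.
  $robots = [
    '#tag' => 'meta',
    '#attributes' => [
      'name' => 'robots',
      'content' => 'noindex,nofollow',
    ],
  ];

  // Each html_head attachment is a pair: the element and a unique key.
  $variables['page']['#attached']['html_head'][] = [$robots, 'robots'];
}
```

After adding the function to `mymodule.module`, rebuild the cache and view the page source on the development environment to confirm the tag is present.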

To go along with this, a properly configured robots.txt file for your development environment will ensure that robots leave your site alone and you don't get random pages you don't want showing up in search results. Here is an example setup for a robots-dev.txt file for your development environment.
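As an illustration only (the original file is not reproduced here), the simplest form of a robots-dev.txt asks every crawler to stay away from every path:

```text
# robots-dev.txt: served in place of robots.txt on the development environment.
# Applies to all crawlers and disallows the entire site.
User-agent: *
Disallow: /
```

Keep in mind that a crawler only sees the robots metatag on pages it is allowed to fetch, so it is worth checking how the search engines you care about combine the two signals.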

And here is a quick snippet to add to your .htaccess file to ensure this file is loaded on your Acquia Cloud development server:
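A sketch of such a rule is shown below; it is not the original snippet. It assumes mod_rewrite is enabled, that the `AH_SITE_ENVIRONMENT` variable is visible to Apache with the value `dev` on the development environment, and that robots-dev.txt sits in the docroot next to robots.txt.

```apache
<IfModule mod_rewrite.c>
  RewriteEngine on
  # Assumption: Acquia Cloud exposes the environment name to Apache.
  RewriteCond %{ENV:AH_SITE_ENVIRONMENT} ^dev$
  # Serve robots-dev.txt whenever robots.txt is requested on dev.
  RewriteRule ^robots\.txt$ /robots-dev.txt [L]
</IfModule>
```

If you add this to Drupal's stock .htaccess, place it before the rule that routes requests to index.php, then request /robots.txt on the development environment to confirm the dev version is returned.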

By implementing your robots.txt properly along with the proper robots metatag in your header, you can ensure that your website will not be indexed by search engine crawlers that obey the rules. You also get the benefit of being able to test your site's caching on your non-production environments.

Are you looking for more help with your Drupal 8 website? Contact us today and find out how we can help!